With Australia’s new teen social media restrictions set to come into effect within weeks, we’ll soon have our first significant test of whether such restrictions are workable, and indeed, enforceable by law.

While support for tougher restrictions on social media access is now at record high levels, the challenge lies in actual enforcement, and in implementing systems that can effectively detect underage users. Thus far, no platform has been able to enact workable age checking at scale, though all platforms are now developing new systems and processes in the hopes of aligning with these new legal requirements.

Such requirements also look set to become the norm around the world, following Australia’s lead.

Looking at Australia specifically, from December 10th, all social media platforms will have to “take reasonable steps” to restrict teens under the age of 16 from accessing their apps.

To be clear, the minimum age to create an account on all the major social apps is already 13, but this new push is designed to increase enforcement, and ensure that each platform is held accountable for keeping young teens out of their apps.

As explained by Australia’s eSafety Commission:

“There’s evidence between social media use and harms to the mental health and wellbeing of young people. While there can be benefits in social media use, the risk of harms may be increased for young people as they do not yet have the skills, experience or understanding to navigate complex social media environments.”

Which is why Australia is enacting this new law, with the eSafety Commission now running ads in social apps to inform users of the coming change.

And as noted, many other regions are also considering similar restrictions, based on the growing corpus of evidence that social media exposure can be harmful for young users.

Though detection remains a challenge, and without universal rules on how social apps detect and restrict teens, legal enforcement of any such bill will be difficult.

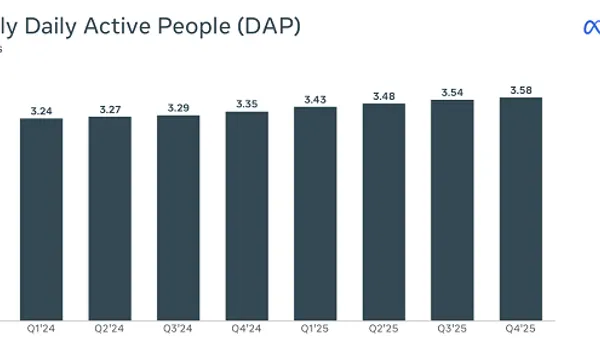

Australia, for its part, conducted a series of tests with various age detection measures, including video selfie scanning, age inference from signals, and parental consent requirements, in order to determine whether accurate age detection is possible using the latest technology, and what the best approach might be.

The summary results from this report were:

“We found a plethora of approaches that fit different use cases in different ways, but we did not find a single ubiquitous solution that would suit all use cases, nor did we find solutions that were guaranteed to be effective in all deployments.”

Which seems problematic. Rather than implementing an industry-standard system, which would then ensure that all social platforms under these new restrictions would be held to the same standards, Australia has instead opted to advise the platforms of these various options, and let them choose which they want to use.

The justification here, as per the above quote, is that different technologies have different benefits and limitations in varying contexts, and as such, the platforms themselves need to find the best solution for their use, in alignment with the new rules.

Though that seems like a potential legal loophole, in that if a platform is found to be failing to restrict young teens, it’ll be able to argue that it is taking “reasonable steps” with the systems it has, whether they’re the most effective systems or not.

YouTube has already refused to abide by the new standards (arguing that it’s a video platform, not a social media app), while every social platform has opposed the push, arguing that it simply won’t be effective. Another counterargument is that the ban will actually end up driving young people to more dangerous corners of the internet that aren’t being held to the same standards. Meta and TikTok, however, have said that they will adhere to the new rules, even though they object to them.

Though of course, given the usage impacts, you’d expect the platforms to push back. Meta reportedly has around 450k Australian users under the age of 16 across Facebook and IG, while TikTok looks set to lose around 200k users, and Snap has more than 400k young teens in the region.

As such, maybe the platforms would push back no matter the detail, though Meta has also implemented a range of new age verification measures of its own to comply with these and other proposed teen restrictions.

And again, many regions are watching Australia’s implementation here, and considering their own next steps.

France, Greece and Denmark have put their support behind a proposal to restrict social media access for users aged under 15, while Spain has proposed an access restriction for users under 16. New Zealand and Papua New Guinea are also moving to implement their own laws to restrict teen social media access, while the U.K. has implemented new regulations around age checking, in an effort to force platforms to take more action on this front.

One way or another, more age restrictions are coming for social apps, so the platforms will have to implement improved measures.

The question now is how effective each approach will be, and how regulators can set a clear industry standard for age checking.

Because telling platforms to take “reasonable steps,” then leaving them to find their own best way forward, is going to lead to legal challenges, which could well render this push effectively useless.