Meta has confirmed that it’s expanding its Teen Account protections to all teens around the world using Instagram, Facebook and Messenger, which should provide greater safety for young users, as well as additional peace of mind for parents.

Meta’s Teen Accounts, which it first launched in the U.S. last year, automatically limit interactions with certain accounts when Meta’s systems determine that a user is under 18. Teen profiles are also subject to additional limits on what they can view in the app, and teens are shown alerts about how much time they’ve spent in the app.

And now, all teens globally will get the same protections, as another step towards enhancing safety within Meta’s systems.

As explained by Meta:

“A year ago, we introduced Teen Accounts - a significant step to help keep teens safe across our apps. As of today, we’ve placed hundreds of millions of teens in Teen Accounts across Instagram, Facebook, and Messenger. Teen Accounts are already rolled out globally on Instagram and are further expanding to teens everywhere around the world on Facebook and Messenger today.”

So, as Meta notes, all teens using Instagram, Facebook and Messenger will now be subject to these new teen restrictions and protections.

One key concern, though, is that kids can lie about their age and subvert Meta’s teen restrictions.

But Meta is also working to address this through advances in its age detection systems, which can now use a broad range of factors to determine a user’s age, including who follows you, who you follow, what content you interact with, and more.

These evolving systems, which also benefit from Meta’s developing AI processes, should make it much harder for teens to cheat their way past the restrictions, and in combination, these measures should provide greater protection for all teens.

That’s important, not only for keeping young people safe, but also from a regulatory standpoint, with many nations now looking to enact laws that restrict social media access for young users. France, Greece and Denmark are pushing for new EU-wide regulations, while Spain is considering restricting access to users aged 16 and over. Australia and New Zealand are also moving to implement their own laws, and Norway is in the process of developing its own regulations.

It seems inevitable that some level of restriction will be imposed on teen social media use in many regions, and in this sense, Meta is getting ahead of the wave by improving its detection and protection systems. That could be enough to keep it ahead of the game and help it avoid major impacts from such laws.

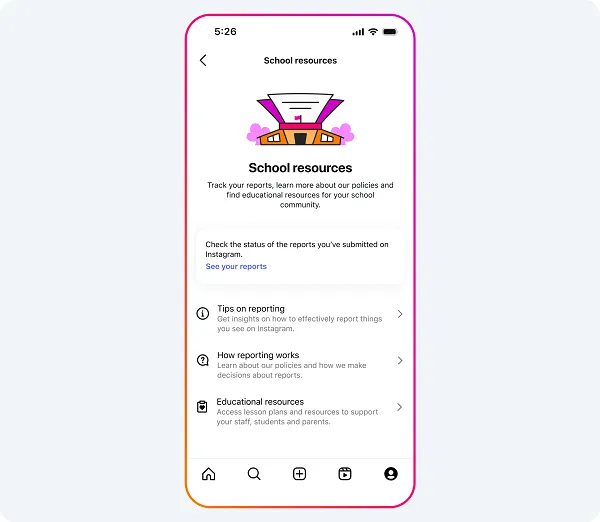

Meta is also launching a new School Partnership Program for all U.S. middle and high schools, which aims to help educators report safety concerns directly to Meta for quicker review.

“This means that schools can report Instagram content or accounts that may violate our Community Standards for prioritized review, which we aim to complete within 48 hours. We piloted this program over the past year and opened up a waitlist for schools to join in April. The program has helped us quickly respond to educators’ online safety concerns, and we’ve heard positive feedback from participating schools.”

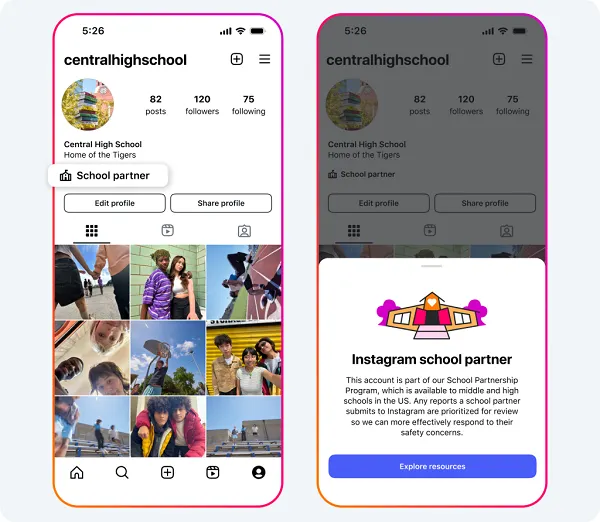

Participating schools will also be able to display a banner on their Instagram profile to show parents and students that they’re an official Instagram partner in the program.

Schools can sign up for the program through Meta.

Finally, Meta has partnered with Childhelp to develop an online safety curriculum specifically for middle schoolers, with the aim of reaching one million students by next year.

In combination, these initiatives should help keep young people safe and improve digital literacy education, which could go a long way toward limiting harm in Meta’s apps.

Will that be enough to appease regulators and keep them from imposing more restrictions on Meta? Probably not, but either way, Meta is moving more in line with community expectations and should be better placed to handle upcoming changes to local laws on this front.