AI chatbots are set to come under regulatory scrutiny, and could face new restrictions, as a result of a new probe.

Following reports of concerning interactions between young users and AI-powered chatbots in social apps, the Federal Trade Commission (FTC) has ordered Meta, OpenAI, Snapchat, X, Google and Character AI to provide more information on how their AI chatbots function, in order to establish whether adequate safety measures have been put in place to protect young users from potential harm.

As per the FTC:

“The FTC inquiry seeks to understand what steps, if any, companies have taken to evaluate the safety of their chatbots when acting as companions, to limit the products’ use by and potential negative effects on children and teens, and to apprise users and parents of the risks associated with the products.”

As noted, the inquiry stems from reports of troubling interactions between AI chatbots and teens across various platforms.

For example, Meta has been accused of allowing its AI chatbots to engage in inappropriate conversations with minors, and even of encouraging such interactions, as it seeks to maximize usage of its AI tools.

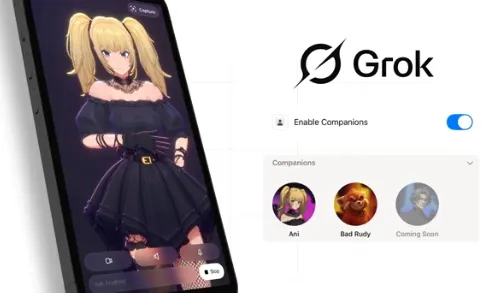

Snapchat’s “My AI” chatbot has also come under scrutiny over how it engages with youngsters in the app, while X’s recently introduced AI companions have raised a raft of new concerns as to how people will develop relationships with these digital entities.

In each of these examples, the platforms have pushed to get these tools into the hands of consumers as a means of keeping up with the latest AI trend, and the worry is that safety may have been overlooked in the name of progress.

We don’t yet know what the full impacts of such relationships will be, or how they will affect users long-term. And that’s prompted at least one U.S. senator to call for all teens to be banned from using AI chatbots entirely, which is at least part of what’s inspired this new FTC investigation.

The FTC says that it will be specifically looking into what actions each company is taking “to mitigate potential negative impacts, limit or restrict children’s or teens’ use of these platforms, or comply with the Children’s Online Privacy Protection Act Rule.”

The FTC will be looking into various aspects, including development and safety tests, to ensure that all reasonable measures are being taken to minimize potential harm within this new wave of AI-powered tools.

And it’ll be interesting to see what the FTC ends up recommending, because thus far, the Trump Administration has leaned towards progress over process in AI development.

In its recently launched AI action plan, the White House put a specific focus on eliminating red tape and government regulation, in order to ensure that American companies are able to lead the way on AI development. That approach could extend to the FTC, and it remains to be seen whether the regulator will be able to implement restrictions as a result of this new push.

But it is an important consideration, because as with social media before them, I get the impression that we’re going to be looking back on AI bots in a decade or so and questioning how we can restrict their use to protect youngsters.

But by then, of course, it will be too late. Which is why it’s important that the FTC does take this action now, and that it is able to implement new policies.