Meta has published its latest Community Standards Enforcement Report, which outlines all of the content that it took action on in Q2, as well as its Widely Viewed Content update, which provides a glimpse of what was gaining traction among Facebook users in the period.

Neither report shows any major shifts, though the trends here are worth noting, especially in the context of Meta’s shift in policy enforcement toward a model more in line with the expectations of the Trump Administration.

Meta makes specific note of this in the introduction to its Community Standards update:

“In January, we announced a series of steps to allow for more speech while working to make fewer mistakes, and our last quarterly report highlighted how we cut mistakes in half. The latest report continues to reflect this progress. Since we began our efforts to reduce over-enforcement, we’ve cut enforcement mistakes in the U.S. by more than 75% on a weekly basis.”

Which sounds good, right? Fewer enforcement errors is clearly a good thing, as it means that people aren’t being incorrectly penalized for their posts.

But it’s all relative. If you reduce enforcement overall, you’re inevitably going to see fewer mistakes, but that also means that more harmful content will be getting through because of those lower overall thresholds.

So is that what’s happening in Meta’s case?

Well…

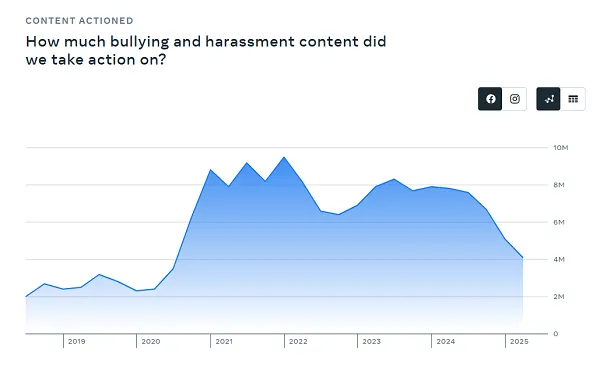

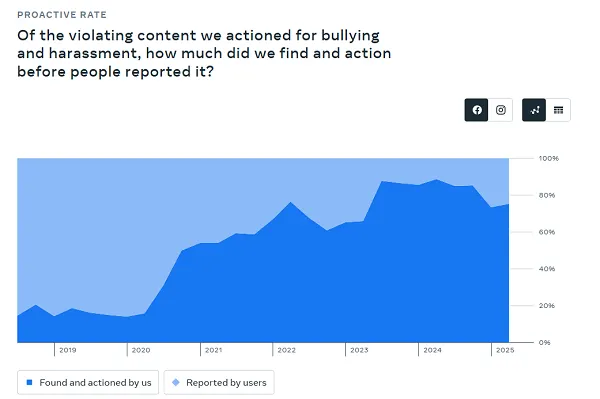

One key measure here would be “Bullying and Harassment,” and the rate at which Meta is enforcing against it under these revised policies.

Going by the data trends, Meta is clearly taking less action on this front:

That is a precipitous drop, while the expanded data also shows that Meta is detecting less of this content before users report it.

So that would suggest that Meta is doing worse on this front, though there would also be fewer false positives.

This demonstrates why Meta’s summary is misleading: reduced enforcement means fewer mistakes, but it also means more harm, with more violating content only being addressed after users report it, rather than being caught proactively.

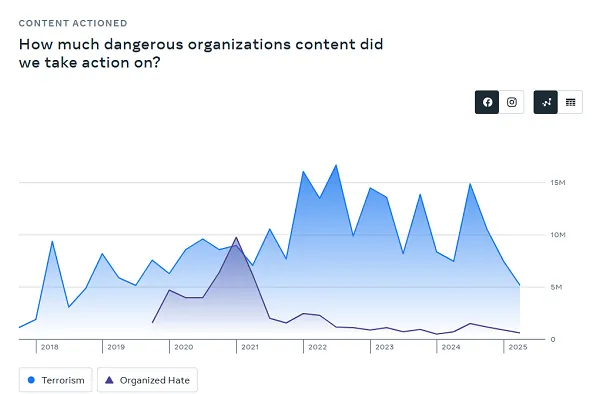

Meta’s also taking less action on “Dangerous Organizations,” which relates to terror and hate speech content.

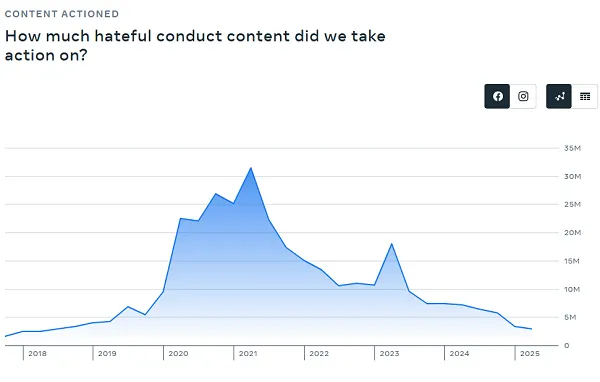

As well as “Hateful Content” specifically:

So you can see from the trends that Meta’s shift in approach is resulting in less enforcement in several key areas of concern. But there are fewer enforcement mistakes. That’s a good thing, right?

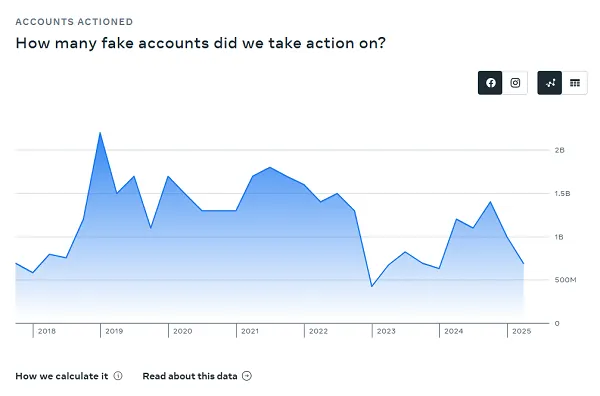

Meta’s also removing fewer fake accounts, though it has pegged its fake account presence at 4% of its overall audience count.

For a long time, Meta pegged this number at 5%, but in its last two reports, it has actually reduced that prevalence figure. For context, in its Q1 report, it noted that 3% of its worldwide monthly active users on Facebook were fake accounts. So by this measure, it’s doing better than its long-standing estimate, but worse than last quarter.

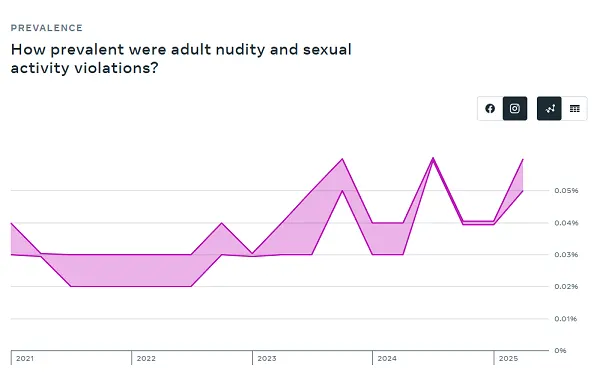

Meta also says that this increase is a bit of a misnomer, because the rise in detections actually relates to improved measurement of nudity and sexual activity violations, not an actual increase in views of such content.

Though this:

Still seems like a concern.

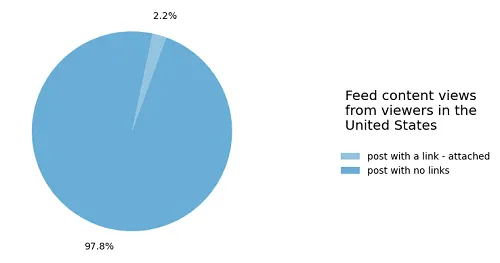

Also, more bad news for those looking to drive referral links from Facebook:

“97.8% of the views in the US during Q2 2025 did not include a link to a source outside of Facebook. For the 2.2% of views in posts that did include a link, they typically came from a Page the person followed (this includes posts which may also have had photos and videos, in addition to links).”

And despite Meta moving to allow more political discussion in its apps, that link-sharing figure is actually getting worse, with Meta reporting that link posts made up 2.7% of overall views in Q1.

So, on balance, link posts still aren’t getting a lot of views.

The most viewed posts on Facebook in Q2 consisted of the usual mix of topical news and sideshow oddities, including updates about the death of Pope Francis, a brawl at a Chuck E. Cheese restaurant, rappers pledging to buy grills for a local football team if it won a championship, a Texas woman dying of a “brain eating amoeba” and Emma Stone avoiding a “bee attack” at a red carpet premiere.

So Facebook remains a mish-mash of news content and supermarket tabloid rubbish, though there were no bizarre AI-generated posts this time around (note: two of the most-viewed posts were no longer available).

Meta has also shared its Oversight Board’s 2024 annual report, which shows how its independent review panel helped to shape Meta policy throughout the year.

As per the report:

“Since January 2021, we have made more than 300 recommendations to Meta. Implementation or progress on 74% of these has resulted in greater transparency, clear and accessible rules, improved fairness for users and greater consideration of Meta’s human rights responsibilities, including respect for freedom of expression.”

Which is a positive, showing that Meta is looking to evolve its policies in line with critical review of its rules.

Though its broader policy shifts toward greater speech freedoms will remain the key focus, for the next three years at least, as Meta looks to align more closely with the requests of the U.S. government.